July 31, 2006

Notes from a talk about DiamondTouch

I went to another University of Colorado computer science colloquium last week, covering Selected HCI Research at MERL Technology Laboratory. I've blogged about some of the talks I've attended in the past.

This talk was largely about the DiamondTouch, but an overview of Mitsubishi Electronic Research Laboratories was also given. The DiamondTouch is essentially a tablet PC writ large--you interact through a touch screen. The biggest twist is that the touch screen can actually differentiate users, based on electrical impulses (you sit on special pads which, I believe, generate the needed electrical signatures). To see the DiamondTouch in action, check out this YouTube movie showing a user playing World Of Warcraft on a DiamondTouch. (For more on YouTube licensing, check out the latest Cringely column.)

What follows are my loosely edited notes from the talk by Kent Wittenburg and Kathy Ryall.

[notes]

First, from Kent Wittenburg, one of the directors of the lab:

MERL is a research lab. They don't do pure research--each year they have a numeric goal of business impacts. Such impacts can be a standards contribution, a product, or a feature in a product. They are associated with Mitsubishi Electric (not the car company).

Five areas of focus:

- Computer vision--2D/3D face detection, object tracking

- Sensor and data--indoor networks, audio classification

- Digital Communication--UWB, mesh networking, ZigBee

- Digital Video--MPEG encoding, highlights detection, H.264. Interesting anecdote--realtime video processing is hard, but audio processing can be easier, so they used audio processing to find highlights (GOAL!!!!!!!!!!!!) in sporting videos. This technology is currently in a product distributed in Japan.

- Off the Desktop technologies--touch, speech, multiple display calibration, font technologies (some included in Flash 8), spoken queries

The lab tends to have a range of time lines--37% long term, 47% medium and 16% short term. I think that "long term" is greater than 5 years, and "short term" is less than 2 years, but I'm not positive.

Next, from Kathy Ryall, who declared she was a software person, and was focusing on the DiamondTouch technology.

The DiamondTouch is multiple user, multi touch, and can distinguish users. You can touch with different fingers. The screen is debris tolerant--you can set things on it, or spill stuff on it and it continues to work. The DiamondTouch has legacy support, where hand gestures and pokes are interpreted as mouse clicks. The folks at MERL (and other places) are still working on interaction standards for the screen. The DiamondTouch has a richer interaction than the mouse, because you can use multi finger gestures and pen and finger (multi device) interaction. It's a whole new user interface, especially when you consider that there are multiple users touching it at one time--it can be used as a shared communal space; you can pass documents around with hand gestures, etc.

It is a USB device that should just plug in and work. There are commercial developer kits available. These are available in C++, C, Java, Active X. There's also a Flash library for creating rapid prototype applications. DiamondSpin is an open source java interface to some of the DiamondTouch capabilities. The folks at MERL are also involved right now in wrapping other APIs for the DiamondTouch.

There are two sizes of DiamondTouch--81 and 107 (I think those are the diagonal measurements). One of these tables costs around $10,000, so it seems limited to large companies and universities for a while. MERL is also working on DiamondSpace, which extends the DiamondTouch technology to walls, laptops, etc.

[end of notes]

It's a fascinating technology--I'm not quite sure how I'd use it as a PC replacement, but I could see it (once the cost is more reasonable) but I could see it as a bulletin board replacement. Applications that might benefit from multiple user interaction and a larger screen (larger in size, but not in resolution, I believe), like drafting and gaming, would be natural for this technology too.

How green is your computer?

Find out at the Electronic Product Environmental Assessment Tool.

July 14, 2006

Another RSS To Email site

Along the lines of Squeet, which I mentioned previously, it looks like Craig2Mail does a good job of converting RSS feeds to emails.

July 05, 2006

Paper examining on the fly compression

I found this paper (PDF), while a bit old (from 2002), to be a useful analysis of on the fly compression (a la mod_gzip or mod_deflate).

June 21, 2006

How to receive craigslist searches via email

craigslist is an online classified ad service, with everything from personals to real estate to bartering offered online. I've bought a table from Denver's craigslist and I know a number of folks who have found roommates via craigslist.

If you have a need that isn't available right now, you can subscribe to a search of section of craigslist. Suppose you're looking for a used cruiser bike in Denver, you can search for cruisers and check out the current selection. If you don't like what you see but don't want to keep coming back, you can use the RSS feed link for the search, which is at the lower right corner. Put this link into your favorite RSS reader (this is a simple application that manages RSS feeds, which are essentially lists of links. I'd recommend Bloglines but there are many others out there) and you can be automatically apprised of any new cruisers which are posted.

(You find tons of stuff via RSS--stock quotes, job listings, paparazzi photos... The list is endless.)

If you don't want to deal with yet another application, or you're not always in your RSS reader (like me), you can set up an RSS to email gateway. That way, if your cruiser bike search is so urgent you don't want to let a good deal get away, you receive notification of a new posting relatively quickly. If you want, you can even email it to your mobile phone.

The basic steps:

- Go to the Squeet signup page. Sign up for a free account. Don't forget to verify it--they'll send an email to the address you give them.

- Open up a new browser window and go to craigslist, choose the city/section you are interested in, and do a search. The example up above was 'cruiser', in the bike section of the Denver CL.

- Scroll down to the bottom of the search results and right click on the RSS link. Choose either 'Copy Shortcut' or 'Copy Link Location', depending on your browser.

- Switch back to the Squeet window, and click in the 'FEED URL' box. Paste in the link you just copied. Choose your notification time period--I'd recommend a frequency of 'live', since cruiser bikes in the Denver area tend to move pretty quick. Then click the subscribe button.

That's it. Just wait for the emails to roll in and soon enough you'll find the cruiser bike of your dreams. Just be aware that it's not real time--I've seen lags from 30 minutes to 2 hours from post to email. Still, it's a lot easier that clicking 'Refresh' on your browser all day and night.

June 20, 2006

Google offers geocoding

Google now offers geocoding services. Up to 50,000 addresses a day. I built a geocoding service from the Tiger/Line database in the past. Comparing its results with the Google geocoding results, and Google appears to be a bit better. I've been looking around the Google Maps API discussion group and the Google Maps API Blog and haven't found any information on the data sources that the geocoding service uses or the various levels of precision available.

June 09, 2006

Naymz.com Launched

A friend has been working on a startup which looks like it's been focusing on identity management on the internet. That startup, Naymz, launched today. I just joined--check out my page. It'll be interesting to see if this site fills a need.

June 06, 2006

Google does spreadsheets

Check out spreadsheets.google.com. Limited time look at what javascript can do for a spreadsheet. I took a quick look and it seems to fit large chunks of what I use Excel or calc, the OpenOffice spreadsheet program, for. Just a quick tour of what I such spreadsheet programs for, and what Google spreadsheet supports:

- cut and paste, of text and formulas

- control arrow movement and selection

- formatting of cells

- merging of cells and alignment of text in cells

- undo/redo that goes at least 20 deep

- sum/count

- can freeze rows

- share and save the spreadsheet

- export to csv and xls

On the other hand, no:

- dragging of cells to increment them (first cell is 45, next is 46, 47...).

- using the arrows to select what goes into a formula--you can type in the range or use the mouse

Pretty decent for a web based application. And it does have one killer feature--updates are immediatly propagated (I have never tried to do this with a modern version of Excel, so don't know if that's standard behaviour). Snappy enough to use, at least on my relatively modern computer. I looked at the js source and it's 55k of crazy javascript (Update, 6/9: This link is broken.). Wowsa.

I've never used wikicalc but it looks more full featured that Google spreadsheets. On the other hand, Google spreadsheets has a working beta version...

This and the acquisition of writely make me wonder if some folks are correct when they doubt that Google will release a software productivity suite. (More here.) Other interesting comments from Paul Kedrosky.

I know more than one person that absolutely depend on gmail for business functionality, which spooks me. And in some ways, I agree with Paul, it appears that Google "...takes a nuclear winter approach wherein it ruins markets by freezing them and then cutting revenues to zero."

Personally, if I don't pay for something, I'm always leery of it being taken away. Of course, if I pay, the service can also go away, but at least I have some more leverage with the company--after all, if they take the service away, they lose money.

May 25, 2006

Bloglines and SQL

I moved from my own personal RSS reader (coded in perl by yours truly) to Bloglines about a year ago. The main reason is that Bloglines did everything my homegrown reader did and was free (in $ and in time to maintain it).

But with over 1 billion articles served as of Jan 2006, I always wondered why Bloglines didn't do more collaborative filtering. They do have a 'related feeds' tab, but it doesn't seem all that smart (though it does seem to get somewhat better as you have more subscribers). I guess there are a number of possible reasons:

- It's easier to find feeds that look like they'd be worth reading (I have 180 feeds that I attempt to keep track of)

- blogrolls provide much of this kind of filtering at the user level

- privacy concerns?

- No demand from users

But this article, one of a series about data management in well known web applications, gives another possible answer: the infrastructure isn't set up for easy querying. Sayeth Mark Fletcher of bloglines:

As evidenced by our design, traditional database systems were not appropriate (or at least the best fit) for large parts of our system. There's no trace of SQL anywhere (by definition we never do an ad hoc query, so why take the performance hit of a SQL front-end?), we resort to using external (to the databases at least) caches, and a majority of our data is stored in flat files.

Incidentally, all of the articles in the 'Database War Stories' series are worth reading.

Using Grids?

Tim Bray gives a great write up of Grid Infrastructure projects. But he still doesn't answer Stephen's question: what is it good for?

I think the question is especially relevant for on demand 'batch grids', to use Tim's terms. A 'service grid' has uses that jump to mind immediately; scaling web serving content is one of them. But on demand batch grids (I built an extremely primitive one in college) are good for complicated processes that take a long time. I don't see a lot of that in my current work--but I'm sure my physics professor would be happy to partake.

May 03, 2006

Owen Taylor Blogging

Owen Taylor, who I pleaded (in person and in this blog) to start blogging, has done so. This is Owen Taylor's Weblog. If you're interested in jini, javaspaces or random technological musings, give it a look. Welcome, Owen!

April 19, 2006

apachebench drops hits when the concurrency switch is used

I've used apachebench (or ab), a free load testing tool written in C and often distributed with the Apache Web Server, to load test a few sites. It's simple to configure and gives tremendous throughput. (I was seeing about 4 million hits an hour over 1 gigabit ethernet. I saw about 10% of that from jmeter on the same machine; however, the tests that jmeter was running were definitely more compex.)

Apachebench is not perfect, though. The downsides are that you can only hit one url at a time (per ab process). And if you're trying to load test the path through a system ("can we have folks login, do a search, view a product and logout"), you need to map that out in your shell script carefully. Apachebench has no way to create more complicated tests (like jmeter can). Of course, apachebench doesn't pretend to be a system test tool--it just hits a set of urls as fast as it can, as hard as it can, just like a load tool should.

However, it would be nice to be able to compare hits recieved on the server side and the log file generated by apachebench; the numbers should reconcile, perhaps with some fudge factor for network errors. I have found that these numbers reconcile as long as you only have one client (-c 1, or the default). Once you start adding clients, the server records more hits than apachebench. This seems to be deterministic (that is, repeatable), and worked out to around 4500 extra requests for 80 million requests. As the number of clients approached 1, the discrepancy between the server and apachebench numbers decreased as well.

This offset happened with Tomcat 5 and Apache 2, so I don't think that the issues is with the server--I think apachebench is at fault. I searched the httpd bug database but wasn't able to find anything related. Just be aware that apachebench is very useful for generating large HTTP request loads, but if you need to reconcile for accuracy, skip the concurrency offered.

April 08, 2006

The Eolas Matter, or How IE is Going to Change 'Real Soon Now'

Do you use <object> functionality in your web application? Do you support Microsoft Internet Explorer?

If so, you might want to take a look at this: Microsoft's Active X D-Day, which details Microsoft's plans to change IE to deal with the Eolas lawsuit. Apparently the update won't be critical, but eventually will be rolled into every version of IE.

Here's a bit more technical explanation of what how to fix object embedding from Microsoft, and a bit of history from 2003.

Via Mezzoblue.

March 22, 2006

A survey of geocoding options

I wrote a while back about building your own geocoding engine. The Tiger/Line dataset has some flaws as a geocoding service, most notably that once you get out of urban areas many addresses simply cannot be geocoded.

Recently, I was sent a presentation outlining other options (pdf) which seems to be a great place to start an evaluation. The focus is on Lousiana--I'm not sure how the conclusions would apply to other states' data.

March 20, 2006

Newsgator goes mobile

Congratulations to Kevin Cawley, whose mobile products company has been acquired by Newsgator. I know Kevin peripherally (we talked about J2ME once or twice) and wish him luck in his new job.

Folks whose opinion I respect really like Newsgator for RSS aggregation; it'll be interesting to see how they react when Outlook 12 is released with RSS aggregation built in.

March 14, 2006

Full content feeds and Yahoo ads

I changed the Movable Type template to include full content on feeds. Sorry for the disruption (it may have made the last fifteen entries appear new).

I think sending full content in the feeds (both RSS1 and RSS2) goes nicely with the Yahoo Ads I added a few months ago. Folks who actually subscribe to what I say shouldn't have to endure ads, while those who find the entries via a search engine can endure some advertising. (Russell Beattie had a much slicker implementation of this idea a few years ago.)

More about the ads: I think that they're not great, but I think that's due to my relative lack of traffic--because of the low number of pageviews, Yahoo just doesn't (can't) deliver ads that are very targeted. (I've seen a lot of 'Find Dan Moore'). It's also a beta service (ha, ha). Oh well--it has paid me enough to go to lunch (but I'll have to wait because they mail a check only when you hit $100).

As long as we're doing public service announcements, I've decided to turn off comments (from the initial post, rather than only on the old ones). Maybe it's because I'm posting to thin air, or because I'm not posting on inflammatory topics, or because comment spam is so prevalent, but I'm not getting any comments anymore (I think 5 in the last 6 months). So, no more comments.

And that's the last blog about this blog you'll see, hopefully for another year.

February 19, 2006

Choosing a wiki

I am setting up a wiki at work. These are our requirements:

- File based storage--so I don't have to deal with installing a database on the wiki server

- Authentication of some kind--so that we can know who made changes to what document

- Versioning--so we can roll back changes if need be.

- PHP based--our website already runs php and I don't want to deal with alternate technologies if I don't have to.

- Handles binary uploads--in case someone had a legacy doc they wanted to share.

- Publish to PDF--so we can use this wiki for more formal documents. We won't publish the entire site, but being able to do this on a per document basis is required.

I started with PHPWiki but its support for PDF was lacking. (At least, I couldn't figure it out, even though it was documented.)

After following the wizard process at WikiMatrix (similar to CMSMatrix, which I've touched on before), I found PmWiki, which has support for all of our requirements. It also seems to have a nice extensible architecture.

Installation was a snap and after monkeying around with authentication and PDF installation (documented here), I've added the following lines to my local/config.php:

include_once('cookbook/pmwiki2pdf/pmwiki2pdf.php');

$EnablePostAuthorRequired = 1;

$EnableUpload = 1;

$UploadDir = "/path/to/wiki/uploads";

$UploadUrlFmt = "http://www.myco.com/wiki/uploads";

$UploadMaxSize = 100000000; // 100M

February 03, 2006

Google tricks

Not only can RSS get you a job and Google spare you from remembering URLs, but combining them lets you find when your namesake is in the news. Via the Justin Pfister RSS generator and Q Digital Studio.

February 02, 2006

Testing XMLHttpRequest's adherence to HTTP response codes

mnot has a set of tests looking at the behaviour of XMLHttpRequest as it follows various HTTP responses. Some of it is pretty esoteric (how many folks are using the DELETE method?--oh wait). But all in all it's interesting, especially given the buzz surrounding AJAX, of which XMLHttpRequest is a fundamental part.

February 01, 2006

Scott Davis pleads "Evolve" to Microsoft

An eloquent plea which points out some of the complexities of Microsoft's current situation. Microsoft, which had revenues of $9.74 billion in 2006 Q1 (with operating income of $4.05 billion), certainly isn't an industry darling anymore. I'm sure someone in Redmond is well aware that:

Once stalled, no U.S. company larger than $15 billion has been able to restart sustained double digit internal growth.

January 26, 2006

New bloggers

A couple of folks I've worked with in the past have begun blogging (or, have let me know they were blogging). They aren't developers, but do deal with the software world. (I'm shocked to note that both of their blogs are much snazzier than mine.)

Susan Mowery Snipes does web design. Her blog is company news and also exhibits the perspective of a UI focused designer--sometimes it's a bit through the looking glass (why would someone judge a website in 1/20 of second), but that's good for us software folks to take a look at.

And if a designer has a different view, a project manager is on a different planet. I admire the best PMs because they are able to manage even though they might not have any clue about technology details. Come to think of it, that may actually help. Regardless, Sarah Gilbert has been blogging for a while, but just shared the URL with me. She's an excellent writer and I am looking forward to reading more entries like Trust Me, You Don't Do Everything. I do wish she'd allow comments, though.

January 19, 2006

Software Licensing Haiku

I thought this list of software licensing haikus was pretty funny.

Kinda old, thought I'd update with some other licenses:

Apache: not the GPL! / we let you reuse to sell / you break it you buy

Creative Commons: choose one from many / confused? we will help whether / simple or sample

Berkley: do not remove the / notice, nor may you entangl' / berkeley in your mess

Artistic: tell all if you change / package any way you like / keep our copyright

January 08, 2006

Yahoo Ads

As you may have noticed, especially if you use an RSS reader, I've installed Yahoo Ads beta program on some of my pages. For the next few months, I plan to run Yahoo Ads on the individual article pages, as well as my popular JAAS tutorial.

I've not done anything like this before, but since I write internet software, and pay per click advertising is one of the main ways that such software makes money, I thought it'd be an interesting experiment. Due to the dictatorial Term and Conditions, I probably won't comment on any other facets of the ads in the future....

Please feel free to comment if you feel that the ads are unspeakably gauche--can't say I've been entirely happy with their targeting.

December 20, 2005

Mozilla, XPCOM and xpcshell

Most people know about mozilla through Firefox, their IE browser replacement. (Some geeks may remember the Netscape source code release.) But mozilla is a lot more than just a browser--there's an entire API set, XPCOM and XUL, that you can use to build applications. (There are books about doing so, but mozilla development seems to run ahead of them.) I'm working on a project that needs some custom browser action, so looking at XPCOM seemed a wise idea.

XPCOM components can be written in a variety of languages, but most of the articles out there focus on C++. While I've had doubts about scripting languages and large scale systems, some others have had success heading down the javascript path. I have no desire to delve into C++ any more than I have to (I like memory management), so I'll probably be writing some javascript components. Unfortunately, because XPCOM allows javascript to talk to C++, I won't be able to entirely avoid the issue of memory management.

xpcshell is an application bundled with mozilla that allows me to interact with mozilla's platform in a very flexible manner. It's more than just another javascript shell because it gives me a way to interact with the XPCOM API (examples). To install xpcshell (on Windows) make sure you download and install the zip file, not the Windows Installer. (I tried doing the complete install and the custom install, and couldn't figure out a way to get the xpcshell executable.)

One cool thing you can do with xpcshell is write command line javascript scripts. Putting this:

var a = "foobar";

print(a);

a=a.substr(1,2);

print(a);in a file named

test.js gives this output:

$ cat test.js | ./xpcshell.exe

foobar

oo

Of course, this code doesn't do anything with XPCOM--for that, see aforementioned examples.

I did run into some library issues running the above code on linux--I needed to execute it in the directory where xpcshell was installed. On windows that problem doesn't seem to occur.

A few other interesting links: installing xpcshell for firefox, firefox extensions with the mozilla build system, a javascript library easing XPCOM development, and another XPCOM reference.

December 01, 2005

Set up your own geocode service

Update, 2/9/06: this post only outlines how to set up a geocode engine for the United States. I don't know how to do it for any other countries.

Geocoder.us provides you with a REST based geocoding service, but their commercial services are not free. Luckily, the data they use is public domain, and there are some helpful perl modules which make setting up your own service a snap. This post steps you through setting up your own geocoding service (for the USA), based on public domain census data. You end up with a Google map of any address in the USA, but of course the lat/long you find could be used with any mapping service.

First, get the data.

$ wget -r -np -w 5 --random-wait ftp://www2.census.gov/geo/tiger/tiger2004se/

If you only want the data for one state, put the two digit state code at the end of the ftp:// url above (eg ftp://www2.census.gov/geo/tiger/tiger2004se/CO/ for Colorado's data).

Second, install the needed perl modules. (I did this on cygwin and linux, and it was a snap both times. See this page for instructions on installing to a nonstandard location with the CPAN module and don't forget to set your PERL5LIB variable.)

$ perl -MCPAN -e shell

cpan> install S/SD/SDERLE/Geo-Coder-US-1.00.tar.gz

cpan> install S/SM/SMPETERS/Archive-Zip-1.16.tar.gz

Third, import the tiger data (this code comes from the Geo::Coder::US perldoc, and took 4.5 hours to execute on a 2.6ghz pentium4 with 1 gig of memory). Note that if you install via the CPAN module as shown above, the import_tiger_zip.pl file is under ~/.cpan/:

$ find www2.census.gov/geo/tiger/tiger2004se/CO/ -name \*.zip

| xargs -n1 perl /path/to/import_tiger_zip.pl geocoder.db

Now you're ready to find the lat/long of an address. Find one that you'd like to map, like say, the Colorado Dept of Revenue: 1375 Sherman St, Denver, CO.

$ perl -MGeo::Coder::US -e 'Geo::Coder::US->set_db( "geocoder.db" );

my($res) = Geo::Coder::US->geocode("1375 Sherman St, Denver, CO" );

print "$res->{lat}, $ res->{long}\n\n";'

39.691702, -104.985361

And then you can map it with Google maps.

Now, why wouldn't you just use Yahoo!'s service (which provides geocoding and mapping APIs)? Perhaps you like Google's maps better. Perhaps you don't want to use a mapping service at all, you just want to find lat/longs without reaching out over the network.

November 03, 2005

Article on open formats

Gervase Markham has written an interesting article about open document formats. I did a bit of lurking on the bugzilla development lists for a while and saw Gervase in action--quite a programmer and also interested in the end user's experience. I think he raises some important issues--if html had been owned by a company, the internet (as the web is commonly known, even though it's only a part of the internet) would not be where it is today. If Microsoft Word (or WordPerfect) had opened up their document specification (or worked with other interested parties on a common one), other companies could have competed on features and consumers would have benefited. More on OpenDocument, including a link to a marked up version of a letter from Microsoft regarding the standard.

September 28, 2005

TheOnion on the 'WikiConstitution'

Seems like the Wikipedia model isn't for everyone: Congress abandons WikiConstitution.

August 07, 2005

Singing the praises of vmware

In the past few months, I've become a huge fan of vmware, the Workstation in particular. If you're not familiar with this program, it provides a virtual machine in which you can host an operating system. If you're developing on Windows, but targeting linux, you can run an emulated machine and deploy your software to it.

The biggest, benefit, however, occurs when starting a project. I remember at a company I worked at a few years ago, I was often one of the first people on a project. Since our technology stack often changed to meet the clients' needs, I usually had to learn how to install and troubleshoot a new piece of server software (ATG Dynamo, BEA Weblogic, Expresso, etc). After spending a fair amount of time making sure I knew how to install and deploy the new platform, I then wrote up terse yet complete (hopefully) installation documents for the future members of the team as the project rolled into development. Of course, that was not the end of it; there were slight differences in environment and user capability which meant that I was a resource for the rest of the team regarding platform configuration.

These factors made me a strong proponent of server based development, where you buy a high powered box and everyone develops (via CVS, samba or some other network protocol) and deploys (via a virtual server for each developer) to that box. Of course, setting it up is a hassle, but once it's done, adding new team members is not too much of a hassle. Compare this with setting up a windows box to do java development, and some of the complications that can ensue due to the differing environments.

But vmware changes the equation. Now, I, or someone like me, can create a development platform from scratch that includes everything from the operating system up. Combined with portable hard drives, which have become absurdly cheap (many gig for a few hundred bucks) you can distribute the platform to a new team member in less than hour. He or she can customize it, but if they ruin the image, it's easy enough to give the developer another copy. No weird operating system problems and no complicated dev server setup. This software saves hours and hours of development time and lets developers focus on code and not configuration. In addition, you can actually do development on an OS with a panoply of tools (Windows) and at the same time test deployment to a serious server OS (a UNIX of some kind).

However, there are still some issues to be aware of. That lack of knowledge often means that regular programmers can't help debug complicated deployment issues. Perhaps you believe that they shouldn't need to, but siloing the knowledge in a few people can lead to issues. If they leave, knowledge is lost, and other team members have no place to start when troubleshooting problems. This is, of course, an issue with or without vmware, but with vmware regular developers really don't even think about the install process; with an install document, they may not fully understand what they're doing, but probably have a bit more knowledge regarding deployment issues.

One also needs to be more vigilant than ever about keeping everything in version control; if the vmware platforms diverge, you're back to troubleshooting different machines. Ideally, everyone should mount a local directory as a network drive in vmware and use their own favorite development tools (Eclipse, netbeans, vi, even [shudder] emacs) on the host. Then each team member can deploy on the image and rest assured that, other than the code or configuration under development, they have the exact same deployment environment as everyone else.

In addition, vmware is a hog. This is to be expected, since it has an entire operating system to support, but I've found that for any real development, 1 gig of RAM is the absolute minimum. And if you have more than one image running at the same time, 1 gig is not enough. A fast processor is needed as well.

Still, for getting development off to a galloping start, vmware is a fantastic piece of software. Even with the downsides, it's worth a look.

July 13, 2005

Exchanging PostgreSQL for Oracle

I have a client who was building some commercial software on top of PostgreSQL. This plans to be a fairly high volume site, 1.8 million views/hour <=> 500 hits a second. Most of the software seemed to work just fine, but they had some issues with Postgres. Specifically, the backup was failing and we couldn't figure out why. Then, a few days ago, we saw this message:

ERROR: could not access status of transaction 1936028719

DETAIL: could not open file "/usr/local/postgres/data/pg_clog/0836": No such file or directory

After a bit of searching, I saw two threads suggesting fixes, which ranged from deleting the offending row to recreating the entire database.

I suggested these to my client, and he thought about it for a couple of days and came up with a solution not suggested on these threads: move to Oracle. Oracle, whose licensing and pricing has been famously opaque, now has a pricing list available online, with prices for the Standard Edition One and Enterprise Edition versions of their database, as well as other software they sell. And my client decided that he could stomach paying for Oracle, given:

1. The prices aren't too bad.

2. The amount of support and knowledgeable folks available for Oracle dwarfs the community of Postgres.

3. He just wants something to work. The value add of his company is in his service, not in the back end database (as long as it runs).

I can't fault him for his decision. PostgreSQL is full featured, was probably responsible for Oracle becoming more transparent and reasonable in pricing, and I've used it in the past, but he'd had enough. It's the same reason many folks have Macs or Windows when there is linux, which is a free tank that is "... invulnerable, and can drive across rocks and swamps at ninety miles an hour while getting a hundred miles to the gallon!".

I'll let you know how the migration goes.

June 20, 2005

Breaking WEP: a Flash Presentation

About two years ago, I wrote about how to secure your wireless network by changing your router password, your SSID, and turning on WEP. Regarding WEP, I wrote:

This is a 128 bit encryption protocol. It's not supposed to be very secure, but, as my friend said, it's like locking your car--a thief can still get in, but it might make it hard enough to not be worth their while.

Now, some folks have created a flash movie showing just how easy it is to break WEP. Interesting to watch, and has a thumping soundtrack to boot.

Via Sex, Drugs, and Unix.

June 17, 2005

Blogging and Legal Issues

Any new technology needs to fit into existing societal infrastructures, whether it's the printing press or the closed circuit TV system. Blogging is no different. I sometimes blog about what I get paid to work on, but always check with my employer first to make sure they're comfortable with it. Since I'm a developer, it's often general solutions to problems, and if need be, I omit any identifying information. Some folks take it farther..

Now, our good friends at the EFF have produced a legal guide for bloggers, which looks to be very useful, but is aimed only at those who live in the United States of America.

June 15, 2005

Search engine hits: a logfile analysis

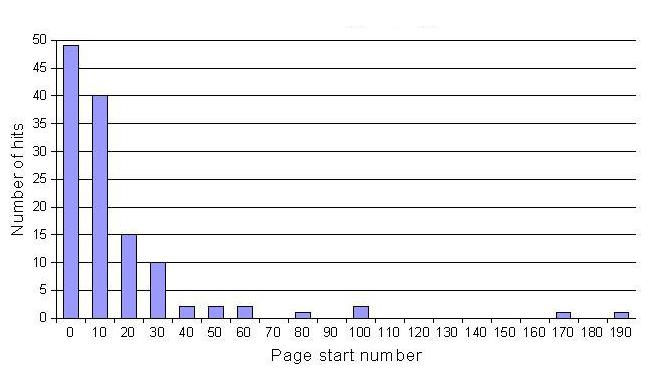

I get most of my website hits on two posts: Yahoo Mail Problems and Using JAAS for Authentication and Authorization. It's common knowledge that if your business "does not rank in the top 20 spots on the major search engines, you might as well be in the millionth ranking spot", but that's apparently not strictly true for content. I looked at my webserver logs over a 42 hours stretch, when I got 125 hits from search engines, and looked at the start parameter, which generally indicates what page the results were on (0 is typically the first 10 results, 10 is the second 10, etc).

Here's the graph of my results:

I have to admit I'm suprised by the number of hits beyond the first 20 results (columns 0 and 1)... 71.2% were from the first two pages, but that measn that 28.8% were from deeper in the search engine results. And someone went all the way to page 20--looking for a "servlet free mock exam" if you must know.

Interesting, for sure. Not that I'm claiming this is a long tail.

June 07, 2005

Useful Tools: wget

I remember writing a spidering program to verify url correctness, about six years ago. I used lwp and wrote threads and all kinds of good stuff. It marked me. Used to be, whenever I want to grab a chunk of html from a server, I scratch out a 30 line perl script. Now I have an alternative. wget (or should it be GNU wget?) is a fantastic way to spider sites. In fact, I just grabbed all the mp3s available here with this command:

wget -r -w 5 --random-wait http://www.turtleserviceslimited.org/jukebox.htm

The random wait is in there because I didn't want to overwhelm their servers or get locked out due to repeated, obviously nonhuman resource requests. Pretty cool little tool that can do a lot, as you can see from the options list.

June 06, 2005

IVR UI Guidelines

I was just complaining today to some friends that IVR systems (interactive voice recognition, or, the annoying female voice who 'answers' the phone and tries to direct you to the correct department when you call your credit card company) need some guidelines because it seems like every system does things just a little bit differently--enough to annoy the heck out of me. Well, lo and behold, google knows. Here is a paper on the topic and here's a coffee talk on the topic by a former coworker (today must be a day for references to former coworkers).

Some of my frustrations with IVR systems are due to the very market forces that drive companies to use them (making it hard to reach an operator helps when trying to cut labor costs) and some are due to limitations on audio as an information conveyance (typically, reading is quicker than listening).

New tech comic

Jut got an email from an old coworker who used to do some pretty great comic strips. (Nothing nationally syndicated that I know of.) He's started a new one, Bug Bash, that is 'updated weekly, technology-focused, and based loosely on my experiences at "a large northwest software company." '

Take a look...

May 26, 2005

Mail filtering

Here's a very interesting article for the sysdamins among us on how to fix the spam problem, at least for one site. The guy has received one million spams a day. Wow. It's worth struggling through the frames layout to find out how he fixes the problem.

May 11, 2005

Installing eRoom 7 on Windows XP Pro

This is a quick doc explaining how to install eRoom 7 on Windows XP Professional. It assumes that Windows XP Pro is installed, and you have the eRoom 7 setup program downloaded. This is based on the events of last week, but I believe I remembered everything.

1. Install IIS.

2. Make sure the install account has the 'Act As Part Of The Operating System' privilege. Do this by opening up your control panel (changing to the classic view if need be), double clicking Adminstrative Tools, then Local Security Policy, then expanding the Local Policies node, then clicking the User Rights Assignment node. Double click on 'Act as part of the operating system' (it's the 2nd entry on my list) and add the user that will be installing eRoom.

3. Restart.

4. Run the eRoom setup program. At the end, you'll get this message:

Exception number 0x80040707

Description: Dll function call crashed ISRT._DoSprintf

5. Re-register all your eRoom dlls by opening up a cmd window, cding to C:\Program Files\eRoom\eRoom Server\ and running

regsvr32.exe [dllname]

for each dll in that directory.

6. Run the eRoom MMC plugin: Start Menu, Run, "C:\Program Files\eRoom\eRoom Server\ERSAdmin.msc"

You should then be able to create a site via this screen.

May 05, 2005

Another use for Google maps

Why didn't I think of this first? Combining Google Maps and Craigslist, this site makes looking at rentals fun and easy. Wow!

Via OK/Cancel.

March 26, 2005

Metafor: Using English to create program scaffolding

Continuing the evolution of easier-to-use computer programming (a lineage which includes tools ranging from assembly language to the spreadsheet), Metafor is a way to build "the scaffolding for a program." This doesn't mean that programmers will be out of work, but such software sketching might help to bridge the gap between programmers and non-programmers, in the same way that VBA helped bridge that gap. (I believe that naked objects attacks a similar problem from a different angle.) This obviously has implications for novices and folks who don't understand formal problems as well. Via Roland Piquepaille's Technology Trends, which also has links to some interesting PDFs regarding the language.

However, as most business programmers know, the complicated part of developing software is not in writing the code, but in defining the problem. Depending on how intelligent the Metafor parser is, such tools may help non-technical users prototype their problems by writing sets of stories outlining what they want to achieve. This would have two benefits. In one case, there may be users who have tasks that should be automated by software, but who cannot afford a developer. While definitely not available at the present time, perhaps such story based software could create simple, yet sufficient, applications. In addition, software sketching, especially if a crude program was the result, could help the focus of larger software, making it easier (and cheaper!) to prototype a complicated business problem. In this way, when a developer meets with the business user, they aren't just discussing bullet points and static images, but an actual running program, however crude.

February 21, 2005

Looking for a job? RSS can help

Via buzzhit!, I found jobs.feedster.com. Now, I haven't found online methods to be as useful as some, but I don't deride it like others. It'll be interesting to see how useful jobs-via-RSS become, and I expect further cannibalization of newspaper revenues (as Tony mentions).

February 15, 2005

Article on XmlHttpRequest

XmlHttpRequest popped up on my radar a few months ago when Matt covered it. Back then, everyone and their brother was talking about Google Suggest. Haven't found time to play with it yet, but I like the idea of asynchronous url requests. There's lots of power there, not least the ability to make pull down lists dynamic without shipping everything to the browser or submitting a form via javascript.

I found a great tutorial on XmlHttpRequest by Drew McLellan, who also has a interesting blog. Browser based apps are getting better and better UIs, as Rands notices.

The Economist on Blogging

That bastion of free trade economics and British pithy humor has an article about corporate blogging: Face Value. It focuses on Scoble and Microsoft, but also mentions other bloggers, including Jonathan Schwarz.

There's defintely a fine line between blogging and revealing company secrets. Mark Jen certainly found that out. The quick, informal, personal nature of blogging, combined with its worldwide reach and googles cache, mean that it poses a new challenge to corporations who want to be 'on message'.

It also exposes a new risk for employees and contractors. I blog about all kinds of technologies, including some that I'm paid to use. At what point does the knowledge I gain from a client's project become mine, so that I can post about it? Or does it ever? (Obviously, trade secrets are off limits, but if I discover a better way to use Spring or a solution for a common struts exception, where's the line?) Those required NDAs can be quite chilling to freedom of expression and I have at least one friend who has essentially stopped blogging due to the precarious nature of his work.

February 07, 2005

Database links

I just discovered database links in Oracle. This is a very cool feature which essentially allows you to (nearly) transparently pull data from one Oracle database to another. Combined with a view, you can have a read only copy of production data, real time, without giving a user access to the production database directly.

Along with the above link, section 29 of the Database Administrator's Guide, Managing a Distributed Database, is useful. But I think you need an OTN account to view that link.

February 03, 2005

Networked J2ME Application article up at TSS

An article I wrote about Networked J2ME applications is up at TheServerSide.com. This was based on the talk I gave last year.

January 14, 2005

Options for connecting Tomcat and Apache

Many of the java web applications I've worked on run in the Tomcat servlet engine, fronted by an Apache web server. Valid reasons for wanting to run Apache in front of Tomcat are numerous and include increased clickstream statistics, Apache's ability to quickly and efficiently serve static content such as images, the ability to host other dynamic solutions like mod_perl and PHP, and Apache's support for SSL certificates. This last is especially important--any site with sensitive data (credit card information, for example) will usually have that data encrypted in transit, and SSL is the default manner in which to do so.

There are a number of different ways to deal with the Tomcat-Apache connection, in light of the concerns mentioned above:

Don't deal with the connection at all. Run Tomcat alone, responding on the typical http and https ports. This has some benefits; configuration is simpler and fewer software interfaces tends to mean fewer bugs. However, while the documentation on setting up Tomcat to respond to SSL traffic is adequate, Apache handling SSL is, in my experience, far more common. For better or worse, Apache is seen as faster, especially when when confronted with numeric challenges like encryption. Also, as of Jan 2005, Apache serves 70% of websites while Tomcat does not serve an appreciable amount of http traffic. If you're willing to pay, Netcraft has an SSL survey which might better illuminate the differences in SSL servers.

If, on the other hand, you choose to run some version of the Apache/Tomcat architecture, there are a few different options. mod_proxy, mod_proxy with mod_rewrite, and mod_jk all give you a way to manage the Tomcat-Apache connection.

mod_proxy, as its name suggests, proxies http traffic back and forth between Apache and Tomcat. It's easy to install, set up and understand. However, if you use this method, Apache will decrypt all SSL data and proxy it over http to Tomcat. (there may be a way to proxy SSL traffic to a different Tomcat port using mod_proxy--if so, I was unable to find the method.) That's fine if they're both running on the same box or in the same DMZ, the typical scenario. A byproduct of this method is that Tomcat has no means of knowing whether a particular request came in via secure or insecure means. If using a tool like the Struts SSL Extension, this can be an issue, since Tomcat needs such information to decide whether redirection is required. In addition, if any of the dynamic generation in Tomcat creates absolute links, issues may arise: Tomcat receives requests for localhost or some other hidden hostname (via request.getServerName()), rather than the request for the public host, whichApache has proxied, and may generate incorrect links.

Updated 1/16: You can pass through secure connections by placing the proxy directives in certain virtual hosts:

<VirtualHost _default_:80>

ProxyPass /tomcatapp http://localhost:8000/tomcatapp

ProxyPassReverse /tomcatapp http://localhost:8000/tomcatapp

</VirtualHost>

<VirtualHost _default_:443>

SSLProxyEngine On

ProxyPass /tomcatapp https://localhost:8443/tomcatapp

ProxyPassReverse /tomcatapp https://localhost:8443/tomcatapp

</VirtualHost>

This doesn't, however, address the getServerName issue.

Updated 1/17:

Looks like the Tomcat Proxy Howto can help you deal with the getServerName issue as well.

Another option is to run mod_proxy with mod_rewrite. Especially if the secure and insecure parts of the dynamic application are easily separable (for example, if the application was split into /secure/ and /normal/ chunks), mod_rewrite can be used to rewrite the links. If a user visits this url: https://www.example.com/application/secure and traverses a link to /application/normal, mod_rewrite can send them to http://www.example.com/application/normal/, thus sparing the server from the strain of serving pages needlessly encrypted.

mod_jk is the usual way to connect Apache and Tomcat. In this case, Tomcat listens on a different port and a piece of software known as a connector enables Apache to send the requests to Tomcat with more information than is possible with a simple proxy. For instance, certain variables are sent via the connector when Apache receives an SSL request. This allows Tomcat full knowledge of the state of the request, and makes using a tool like the aforementioned Struts SSL Extension possible. The documentation is good. However using mod_jk is not always the best choice; I've seen some performance issues with some versions of the software. You almost always have to build it yourself: binary releases of mod_jk are few and far between, I've rarely found the appropriate version for my version of Apache, and building mod_jk is confusing. (Even though mod_jk 1.2.8 provides an ant script, I ended up using the old 'configure/make/make install' process because I couldn't make the ant script work.)

In short, there are plenty of options for connecting Tomcat and Apache. In general, I'd start out using mod_jk, simply because that's the option that was built specifically to connect the two; mod_proxy doesn't provide quite the same level of integration.

December 24, 2004

ITConversations and business models

ITConversations is a great resource for audio conversations about technology. Doug Kaye, the owner/manager/executive assistant of IT Conversations, started a wiki conversation last month about that constant bugbear of all websites with free content: funding. Now, when you use 4.2 terrabytes a month of bandwidth, that problem is more intense than average; the conversation is still a worthwhile read for anyone trying to monetize their weblog or open content source.

December 07, 2004

Wireless application deployment solutions

It looks like there's another third party deployment solution being touted. Nokia is offering deployment services for distribution of wireless applications called Preminet Solution (found via Tom Yager). After viewing the hideous Flash presentation and browsing around the site (why oh why is the FAQ a PDF?) this solution appears to be very much like BREW, with perhaps a few more platforms supported (java and symbian are two that I've found so far). Apparently the service isn't launched yet, because when I click on the registration link, I see this:

"Please note that Preminet Solution has been announced, but it is not yet commercially launched. The Master Catalog registration opens once the commercial launch has been made."

For mass market applications, it may make sense to use this kind of service because the revenue lost by due to paying Nokia and the operators is offset by more buyers. However, if you have a targeted application, I'm not sure it's worthwhile. (It'll depend on the costs, which I wasn't able to find much out about.)

In addition, it looks like there's a purchasing application that needs to be downloaded or can be installed on new phones. I can't imagine users wanting to download another application just so they can buy a game, so widespread acceptance will probably have to wait until the client is distributed with new phones.

It'll be interesting to see how many operators pick up on this. It's another case of network effects (at least for application vendors); we'll see if Nokia can deliver the service needed to make Preminet the first choice for operators.

Anyway, wonder if this competitor is why I got an email from Qualcomm touting cheaper something or other? (Didn't really look at it, as I've written brew off until a J2ME bridge is available.)

December 05, 2004

Instant messaging and Yahoo!'s client

Like many folks, I've grown to depend on instant messaging (IM--it's also a verb, to IM is to 'instant message'), in the workplace. It's a fantastic technology, but two years ago, I turned up my nose at it. (Of course, 5 years ago, in a similar manner, I turned up my nose at a cell phone, and now I wouldn't be caught dead without it, so perhaps I'm not the best prognosticator.) What can it possibly offer that email can't? I'm going to examine it from the perspective of a software developer, since that's what I know; from that perspective, there are two main benefits IM offers that email doesn't, timeliness and presence:

I didn't think it was possible, but email can be too formal at times. When you have a question that needs to be answered right away or it becomes superfluous, IM is perfect. If it's a question about consistency of API or an area that you know the recipient knows much better than you, sometimes 30 seconds of their time can be worth 15 minutes of yours. Of course, there's a judgment call to be made; if you're constantly IMing questions about the API of java.lang.String, you risk breaking up the answerer's flow. However, used in moderation, it can greatly increase the communication between team members, especially when it's a distributed team.

Presence is also a huge benefit of most IM software. This means that you have a list of 'buddies' that the IM software monitors for you. When each signs on or signs off, you're made aware of that fact. This means that you can tell whether it's worthwhile calling someone with a deeper question, or if you should just compose an email. The technical details of presence are being codified at the IETF and I foresee this becoming more and more useful, because it's a non intrusive way for folks to manage their availability. It fulfills some of the same functions as a 'door closed/door opened' policy in an office, extending worldwide.

I use Yahoo IM because it fits my needs. Russell Beattie has recently written an overview of the main competitors and their clients, but technical geegaws like integration with music really don't matter all that much to me. Much more important are:

1. Does everyone I need to talk to have an account? How easy is it for them to get an account?

2. Does it have message archiving? How searchable are such archives?

3. How stable is the client?

That's about all I considered. I guess I let my contrarian streak speak too--I'm not a big fan of Microsoft, so I shyed away from Windows Messenger. There are some nice additional features, however. The ability to have a chat session, so that you can IM to more than one person at once, a la IRC, is nice. Grouping your buddies is great--each company I've consulted/contracted for has their own group in my IM client. I just discovered these instructions to put presence information on a web page. Combined with Maven and its intranet, or just put on any intranet page, this could be a useful tool for developers.

(I just read the Terms of Service for Yahoo, and I didn't see any prohibitions on commercial use of Yahoo Messenger; however, there are a couple of interesting clauses that anyone using it should be aware of. In section 3, I found out that Yahoo can terminate your account if your information is not kept up to date (not really enforceable, eh?). And in section 16, "[y]ou agree not to access the Service by any means other than through the interface that is provided by Yahoo! for use in accessing the Service." I wonder if that prohibits Trillian?)

One issue I have with the Yahoo client is the way status works. Presence is not a binary concept (there/not there); rather, it is broken down into various statuses--(not there/available/busy/out to lunch...). What I find myself doing is being very conscientious about changing my status from available to unavailable. However, I rarely remember to change back, which degrades the usefulness of the presence information. (If you have to ping someone over IM to see if they're actually there, it means you might as well not have status information at all.) I spent some time browsing the preferences of Yahoo's client, as well as googling, but didn't find any way to have the client pop up a message the first time I IM someone when my status is not available.

IM is very useful, and I can't imagine working without it now. I don't know what I'm going to do when Yahoo starts charging for it.

December 02, 2004

NextBus: a mobile poster child

I think that NextBus is a fantastic example of a mobile application. This website, which you can access via your mobile phone, tells you when the next bus, on a particular line, is coming. So, if you're out and about and have had a bit much to drink, or if you've just forgotten your bus schedule, you can visit their site and find out when the next bus will be at your stop. It's very useful.

This is almost a perfect application for a mobile phone. The information needed is very time sensitive and yet is easy to display on a mobile phone (no graphics or sophisticated data entry needed). NextBus has a great WAP interface, which probably displays well on almost every modern phone. The information is freely available (at least, information on when the next bus is supposed to arrive is freely available--and this is a good substitute for real time data).

And yet, there are profound flaws in this service. For one, it abandons a huge advantage by not knowing (or at least remembering) where I am. When I view the site to find out when the 203 is coming by next, I have to tell the site that I'm in Colorado, and then in Boulder. The website is a bit better, remembering that I am an RTD customer, but the website is a secondary feature for me--I'm much more interested in information delivered to my phone.

Also, as far as I can tell, the business model is lacking (and, no, I haven't examined their balance sheets). I don't know how NextBus is going to make money, other than extracting it from those wealthy organizations, the public transportation districts. (Yes, I'm trying to be sarcastic here.) They don't require me to sign in or pay anything for the use of their information, and I see no advertising.

So, a service that is almost perfect for the mobile web because of the nature of the information it conveys (textual and time sensitive) is flawed because it's not as useful as it could be and the business model is up in the air. I can't imagine a better poster child for the mobile Internet.

November 28, 2004

Syndication and blogs

I've tried to avoid self-referential blogging, if only becuase I'm not huge into navel staring. But, I just ran across an interesting blog: Wendyopolis, which is apparently associated with a Canadian magazine. Now, according to google, blogs are defined as:

"A blog is basically a journal that is available on the web. The activity of updating a blog is "blogging" and someone who keeps a blog is a "blogger." Blogs are typically updated daily using software that allows people with little or no technical background to update and maintain the blog.

Postings on a blog are almost always arranged in chronological order with the most recent additions featured most prominently."

(From the Glossary of Internet Terms)

However, I'd argue that there are several fundamental characteristics of a blog:

1. Date oriented format--"most recent additions featured most prominently."

2. Informal, or less formal, writing style.

3. Personal voice--a reader can associate a blog with a person or persons.

4. Syndicatability--the author(s) provide RSS or Atom feeds. The feeds may be crippled in some way, but they are available.

5. Permalinks--postings are always available via an unchanging URL.

I can't really think of any other salient characteristics. But the reason for this post is that Wendyopolis, which looks to be a very interesting weblog, doesn't have #4. In some ways, that's the most important feature, because it allows me to pull content of interest to one location, rather than visit sites.

I've written about this before, so I won't beat a dead horse. Suffice it to say that, while Wendyopolis may speak to me right now, the chances of me ever visiting that blog ever again are nil, because of the lack of syndication. Sad, really.

September 20, 2004

Open Books at O'Reilly

I'm always on the lookout for interesting content on the internet. I just stumbled across Free as in Freedom, an account of Richard Stallman, which is published under the umbrella of the Open Books Project.

September 15, 2004

Relearning the joys of DocBook

I remember the first time I looked at Simple DocBook. I have always enjoyed compiling my writing--I wrote my senior thesis using LaTeX. When I found DocBook, I was hooked--it was easier to use and understand than any of the TeX derivatives, and the Simplified grammar had just what I needed for technical documentation. I used it to write my JAAS article.

But, I remember it being a huge hassle to set up. You had to download openjade, compile it on some systems, set up some environment variables, point to certain configuration files and in general do quite a bit of fiddling. I grew so exasperated that I didn't even setup the XML to PDF conversion, just the XML to HTML.

Well, I went back a few weeks ago, and found things had improved greatly. With the help of this document explaining how to set DocBook up on Windows, I was able to generate PDF and HTML files quickly. In fact, with the DocBook XSL transformations and the power of FOP, turning a Simplified DocBook article into a snazzy looking PDF file is as simple as this (stolen from here):

java -cp "C:\Programs\java\fop.jar; \

C:\Programs\java\batik.jar;C:\Programs\java\jimi-1.0.jar; \

C:\Programs\java\xalan.jar; C:\Programs\java\xerces.jar; \

C:\Programs\java\logkit-1.0b4.jar;C:\Programs\java\avalon-framework-4.0.jar" \org.apache.fop.apps.Fop -xsl \ "C:\user\default\xml\stylesheets\docbook-xsl-1.45\fo\docbook.xsl" \ -xml test.xml -pdf test.pdf

Wrap that up in a shell script, and you have a javac for dcuments.

September 14, 2004

Abstractions, Climbing and Coding

I vividly remember a conversation I had in the late 1990s with a friend in college. He was an old school traditional rock climber; he was born and raised in Grand Teton National Park. We were discussing technology and the changes it wreaks on activities, particularly climbing. He was talking about sport climbing. (For those of you not in the know, there are several different types of outdoor rock climbing. The two I'll be referring to today are sport climbing and traditional, or trad, climbing. Sport climbers clip existing protection to ensure their safety; traditional climbers insert their own protection gear into cracks.) He was not bagging on sport climbing, but was explaining to me how it opened up the sport of climbing. A rock climber did not need to spend as much money acquiring equipment nor as much time learning to use protection safely. Instead, with sport climbing, one could focus on the act of climbing.

At that moment it struck me that what he was saying was applicable to HTML generation tools (among many, many other things). During that period, I was just becoming aware of some of the WYSIWYG tools available for generating HTML (remember, in the late 1990s, the web was still gaining momentum; I'm not even sure MS Word had 'Save As HTML' until Word 97). Just like trad versus sport, there was an obvious trade off to be made between hand coding HTML and using a tool to generate it. The tool saved the user time, but acted as an abstraction layer, clouding the user's understanding of what was actually happening. In other words, when I coded HTML from hand, I understood everything that was going on. On the other hand, when I used a tool, I was able to make snazzier pages, but didn't understand what was happening. Let's just repeat that—I was able to do something and have it work, all without understanding why it worked! How powerful is that?

This trend, towards making complicated things easier happens all the time. After all, the first cars were difficult to start, requiring hand cranking, but now I just get in the car and turn the key. This abstraction process is well and good, as long as we realize it is happening and are willing to accept the costs. For there are costs, in climbing, but also in software. Joel has something to say on this topic. I saw an example of this cost myself a few months ago, when Tomcat was not behaving as I expected, and I had to work around an abstraction that had failed. I also saw a benefit to this process of abstraction when I was right out of school. In 1999, there was not the body of frameworks and best practices that currently exist. There was a lot of invention from scratch. I saw a shopping cart get built, and wrote a user authentication and authorization system myself. These were good experiences, and it was much easier to support this software, since it was understood from the ground up by the authors. But, it was hugely expensive as well.

In climbing terms, I saw this trade off recently when I took a friend (a much better climber than I) trad climbing. She led a pitch far below her climbing level, and yet was twigged out by the need to place her own protection. I imagine that's exactly how I would feel were I required to fix my brakes or debug a compiler. Dropping down to a lower abstraction takes energy, time, and sometimes money. Since you only have a finite amount of time, you need to decide at what abstraction level you want to sit. Of course, this varies depending the context; when you're working, the abstraction level of Visual Basic may be just fine, because you just need to get this small application written (though you shouldn't expect such an application to scale to multiple users). When you're climbing, you may decide that you need to dig down to the trad level of abstraction in order to go the places you want to go.

I recently read an interview with Richard Rossiter, who has written some of the canonical guidebooks for front range area climbing. When asked where he thought "climbing was going" Rossiter replied: "My guess is that rock climbing will go toward safety and predictability as more and more people get involved. In other words, sport climbing is here to stay and will only get bigger...." A wise prediction; analogous to my prediction that sometimes understanding the nuts and bolts of an application simply isn't necessary. I sympathize. I wouldn't have wanted to go climbing with hobnail boots and manila ropes, as they did in the old days; nor would I have wanted to have to write my own compiler, as many did in the 1960s. And, as my college friend pointed out, sport climbing does make climbing in general safer and more accessible; you don't have to invest a ton of time learning how to fiddle with equipment that will save your life. At the same time, unless you are one of the few who places bolts, you are trusting someone else's ability to place equipment that will save your life. Just like I've trusted DreamWeaver to create HTML that's readable by browsers—if it does not, and I don't know HTML, I have few options.

Note, though, that it is silly for folks who sit at one level of abstraction to denigrate folks at another. After all, what is the real difference between someone using a compiler and someone using DreamWeaver? They're both trying to get something done, using something that they probably don't understand. (And if you understand compilers, do you understand chip design? How about photo-lithography? Quantum mechanics? Everyone uses things they don't understand at some level.)

It is important, however, to realize that even if you are using a higher abstraction level, there's a certain richness and joy that can't be achieved unless you're at the lower level. (The opposite is true as well—I'd hate to deal with strings instead of classes all the time; sport climbing frees me to enjoy movement on the rock.) Lower levels tend to be more complicated (that's what abstraction does—hides complex 'stuff' behind a veneer of simplicity), so fewer folks enjoy the benefits of, say, trad climbing or compiler design. Again, depending on context, it may be well worth your while to dip down and see whether an activity like climbing or coding can be made more fulfilling by attacking it at a lower level. You'll possibly learn a new skill, which, in the computer world can be a career helper, and in the climbing world may save your life at some time. You'll also probably appreciate the higher level activities if and when you head back to that level, because you'll have an understanding of the mental and temporal savings that the abstraction provides.

September 10, 2004

Slackware to the rescue

I bought a new Windows laptop computer about nine months ago, to replace my linux desktop that I purchased in 2000. Yesterday, I needed to check to see if I had a file or two on the old desktop computer, but I hadn't logged in for eight months; I had no idea what my password was. Now, I should have a root/boot disk set, even though floppy disks are going the way of cursive. But I didn't. Instead, I had the slackware installation disks from my first venture into linux: a IBM PS/2, with 60 meg of hard drive space, in 1997. I was able to use those disks to load a working, if spartan, linux system into RAM. Then, I mounted the boot partition and used sed (vi being unavailable) to edit the shadow file:

sed 's/root:[^:]*:/root::/' shadow > shadow.new

mv shadow.new shadow

Unmount the partition, reboot, pop the floppy out, and I'm in to find that pesky file. As far as I know, those slackware install disks are the oldest bit of software that I own that still is useful.

September 02, 2004

New approach to comment spam

Well, after ignoring my blog for a week, and dealing with 100+ comment spams, I'm taking a new tack. I'm not going to rename my comments.cgi script anymore, as that seems to have become less effective.

Instead, I'm closing all comments on any older entry that doesn't have at least 2 comments. When I go through and delete any comment spam, I just close the entry. This seems to have worked, as I've dealt with 2-3 comment spams in the last week, rather than 10+.

I've also considered writing a bit of perl to browse through Movable Types DBM database to ease the removal of 'tramadol' entries (rather than clicking my way to carpal tunnel). We'll see.

(I don't even know what's involved in using MT-Blacklist. Not sure if the return would be worth the effort for my single blog installation.)

Back to google

So, the fundamental browser feature I use the most is this set of keystrokes:

* cntrl-T--open a new tab

* g search term--to search for "search term"

(I set up g so the keyword expands and points to a search engine.)

Periodically, I'll hear of a new search engine--a google killer. And I'll switch my bookmark so that 'g' points to the new search engine. I've tried AltaVista, Teoma and, lately, IceRocket. Yet, I always return to Google. The others have some nice features--IceRocket shows you images of the pages--and the search results are similar enough. What keeps me coming back to google is the speed of the result set delivery. I guess my attention span has just plain withered.

Anyone else have a google killer I should try?

July 01, 2004

An open letter to Climbing magazine

Here's a letter to Climbing magazine. I'm posting it here because I think that the lessons Climbing is learning, especially regarding the Internet, are relevant to every print magazine.

--------------------

I just wanted to address some of the issues raised in the Climbing July 2004 Editorial, where you mention that you've cut back on advertising as well as touching on the threat to Climbing from website forums. First off, I wanted to congratulate you on adding more content. If you're in the business of delivering readers to advertisers you want to make sure that the readers are there. It doesn't matter how pretty the ads are--Climbing is read for the content. I'm sure it's a delicate balance between (expensive) content that readers love and (paid) advertisements which readers don't love; I wish you the best in finding that balance.

I also wanted to address forums, and the Internet in general. I believe that websites and email lists are fantastic resources for finding beta, discussing local issues, and distributing breaking news. Perhaps climbing magazines fulfilled that need years ago, but the cost efficiencies of the Internet, especially when amateurs provide free content, can be hard to beat. But, guess what? I don't read Climbing for beta, local issues, or breaking news. I read Climbing for the deliberate, beautiful articles and images. This level of reporting, in-depth and up-close, is difficult to find on the web. Climbing should continue to play to the strengths of a printed magazine--quality, thoughtful, deliberate articles and images; don't ignore breaking news, but realize that's not the primary reason subscribers read it. I don't see how any magazine can compete with the interactivity of the Internet, so if Climbing wants to foster community, perhaps it should run a mailing list, or monitor rec.climbing (and perhaps print some of the choice comments). I see you do run a message board on climbing.com--there doesn't look to be much activity--perhaps you should promote it in the magazine?

Now for some concrete suggestions for improvement. One of my favorite sections in Climbing is 'Tech Tips.' I've noticed this section on the website--that's great. But, since this information is timeless, and I've only been a subscriber for 3 years, I was wondering if you could reprint older Tech Tips, to add cheap, useful content to Climbing. Also, I understand the heavy emphasis on the modern top climbers--these are folks that have interesting, compelling stories to tell, which are interesting around the world. Still, it'd be nice to see 'normal' climbers profiled as well, since most of us will never make a living climbing nor establish 5.15 routes, but all climbers have stories to share. And a final suggestion: target content based on who reads your magazine. Don't use just a web survey, as that will be heavily tilted in favor of the folks who visit your website (sometimes no data is better than skewed data). Instead find out what kind of climbers read your magazine in a number of ways: a web survey, a small survey on subscription cards, paper surveys at events where Climbing has presence, etc. This demographic data will let you know if you should focus on the latest sick highball problem, the latest sick gritstone headpoint or the latest sick alpine ascent.

Finally, thanks for printing a magazine worth caring about.

--------------------

June 26, 2004

Friendster re-written in PHP

Friendster is still alive and kicking, and according to

I check in, periodically, to Friendster to see if anyone new has joined, or added a new picture, or come up with a new catchy slogan for themselves. When I joined, it was daily, now it's monthly. One of the things that detracted from the experience was the speed of the site. It was sloooow. Well, they've dealt with that--it's now a peppy site (at least on Saturday morning). And it appears that one of the ways they did this was to switch from JSP to PHP. Wow. (Some folks noticed a while ago.) I wasn't able to find any references comparing the relative speed of PHP and JSP, but I certainly appreciate Friendster's new responsiveness.

June 15, 2004

Symlinks and shortcuts and Apache

So, I'm helping install Apache on a friend's computer. He's running Windows XP SP1, and Apache has a very nice page describing how to install on Windows. A few issues did arise, however.

1. I encountered the following error message on the initial startup of the web server:

[Tue Jun 15 23:09:11 2004] [error] (OS 10038)An operation was attempted on something that is not a socket. : Child 4672: Encountered too many errors accepting client connections. Possible causes: dynamic address renewal, or incompatible VPN or firewall software. Try using the Win32DisableAcceptEx directive.

I read a few posts online that suggested I could just follow the instructions--I did and just added the Win32DisableAcceptEx directive to the bottom of the httpd.conf file. A restart, and now localhost shows up in a web browser.

2. Configuration issues: My friend also has a firewall on his computer (good idea). I had to configure the firewall to allow Apache to receive packets, and respond to them. Also, I had to configure the gateway (my friend shares a few computers behind one fast internet connection) to forward the port that external clients can request information from to the computer on which Apache was running. Voila, now I can view the default index.html page using his IP address.

3. However, the biggest hurdle is yet to come. My friend wants to server some files off one of his hard drives (a different one than Apache is installed upon). No problem on unix, just create a symlink. On windows, I can use a shortcut, right? Just like a symlink, they "...can point to a file on your computer or a file on a network server."

Well, not quite. Shortcuts have a .lnk extension, and Apache doesn't know how to deal with that, other than to serve it up as a file. I did a fair bit of searching, but the only thing I found on dealing with this issue was this link which basically says you should just reconfigure Apache to have its DocRoot be the directory which contains whatever files you'd like to serve up. Ugh.

However, the best solution is to create an Alias (which has helped me in the past) to the directories you're interested in serving up. And now my friend has Apache, installed properly as a service, to play around with as well.

May 28, 2004

Death marchs and Don Quixote